CUDA (or Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for general purpose processing, an approach called general-purpose computing on GPUs (GPGPU). CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements, for the execution of compute kernels.

CUDA is designed to work with programming languages such as C, C++, and Fortran. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming. CUDA-powered GPUs also support programming frameworks such as OpenMP, OpenACC and OpenCL, and also HIP, whose code is compiled to CUDA.

CUDA was created by Nvidia. When it was first introduced, the name was an acronym for Compute Unified Device Architecture, but Nvidia later dropped the common use of the acronym.

What Are CUDA Cores?

CUDA Cores (CUDA stands for Compute Unified Device Architecture) are the Nvidia GPU equivalent of CPU cores. They are designed to take on many calculations at the same time, which is significant when you’re playing a graphically demanding game.

A single CUDA Core plays a role similar to a CPU core. Generally, CUDA Cores are simpler than CPU cores, but they are implemented in much greater numbers: a standard gaming CPU comes with up to 16 cores, while CUDA Cores can easily number in the hundreds.

High-end GPUs can have thousands of CUDA Cores. The purpose is efficient and speedy parallel computing, since more CUDA Cores mean more data can be processed in parallel.

CUDA Cores are found only on Nvidia GPUs from the G8x series onwards, including the GeForce, Quadro and Tesla lines. CUDA works with most operating systems.
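To check what a given machine actually offers, the CUDA Runtime API can list the installed devices. The sketch below is a minimal illustration, not an official tool: the helper names `countCudaDevices` and `printCudaDevices` are made up for this example. Note that the runtime reports the number of multiprocessors rather than a CUDA Core count; the number of cores per multiprocessor depends on the GPU architecture.

```cuda
#include <cuda_runtime.h>
#include <cstdio>

// Hypothetical helper: number of CUDA-capable devices, or 0 if none is
// found or the CUDA runtime reports an error.
int countCudaDevices() {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess) {
        return 0;
    }
    return count;
}

// Hypothetical helper: print name, compute capability and multiprocessor
// count for every device the runtime can see.
void printCudaDevices() {
    const int count = countCudaDevices();
    for (int d = 0; d < count; ++d) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, d) != cudaSuccess) {
            continue;  // skip devices we cannot query
        }
        std::printf("Device %d: %s, compute capability %d.%d, %d multiprocessors\n",
                    d, prop.name, prop.major, prop.minor,
                    prop.multiProcessorCount);
    }
}
```

Calling `printCudaDevices()` from a program compiled with nvcc prints one line per GPU; on a machine without an Nvidia GPU it prints nothing.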

CUDA Programming

Example of CUDA processing flow

Copy data from main memory to GPU memory

CPU initiates the GPU compute kernel

GPU's CUDA cores execute the kernel in parallel

Copy the resulting data from GPU memory to main memory
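The four steps above can be sketched in CUDA C++ as an element-wise vector addition. This is a minimal illustration under stated assumptions: the kernel name `vectorAdd`, the helper `addOnGpu`, and the block size of 256 threads are choices made for this example, not anything prescribed by the text.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Compute kernel: each GPU thread adds one pair of elements.
__global__ void vectorAdd(const float* a, const float* b, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {  // guard: the last block may have spare threads
        out[i] = a[i] + b[i];
    }
}

// Hypothetical helper: computes out = a + b on the GPU.
// Returns true on success, false on a size mismatch or any CUDA error.
bool addOnGpu(const std::vector<float>& a, const std::vector<float>& b,
              std::vector<float>& out) {
    if (a.size() != b.size()) return false;
    const int n = static_cast<int>(a.size());
    out.assign(a.size(), 0.0f);
    if (n == 0) return true;

    const size_t bytes = a.size() * sizeof(float);
    float *dA = nullptr, *dB = nullptr, *dOut = nullptr;
    bool ok = cudaMalloc(&dA, bytes) == cudaSuccess &&
              cudaMalloc(&dB, bytes) == cudaSuccess &&
              cudaMalloc(&dOut, bytes) == cudaSuccess;

    // Step 1: copy data from main memory to GPU memory.
    ok = ok &&
         cudaMemcpy(dA, a.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
         cudaMemcpy(dB, b.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        // Steps 2 and 3: the CPU launches the kernel, and the GPU runs it
        // across many threads in parallel.
        const int threads = 256;  // assumed block size for this sketch
        const int blocks = (n + threads - 1) / threads;
        vectorAdd<<<blocks, threads>>>(dA, dB, dOut, n);
        ok = cudaGetLastError() == cudaSuccess;
    }

    // Step 4: copy the result from GPU memory back to main memory.
    // This blocking copy also waits for the kernel to finish.
    ok = ok &&
         cudaMemcpy(out.data(), dOut, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;

    // cudaFree accepts null pointers, so this is safe after a partial failure.
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dOut);
    return ok;
}
```

A caller would pass two equally sized `std::vector<float>` inputs and read the sums from `out` when the function returns true. Real applications usually keep data on the GPU across several kernel launches, since the copies in steps 1 and 4 are often the most expensive part.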

The CUDA platform is accessible to software developers through CUDA-accelerated libraries, compiler directives such as OpenACC, and extensions to industry-standard programming languages including C, C++ and Fortran. C/C++ programmers can use 'CUDA C/C++', compiled to PTX with nvcc, Nvidia's LLVM-based C/C++ compiler, or by clang itself.[6] Fortran programmers can use 'CUDA Fortran', compiled with the PGI CUDA Fortran compiler from The Portland Group.
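As one illustration of the library route, the sketch below uses Thrust, a C++ template library that ships with the CUDA Toolkit, to compute a*x + y without writing a kernel by hand. Thrust is picked here only as an example of a CUDA-accelerated library; the functor `Saxpy` and the helper `saxpyWithThrust` are hypothetical names, and the code assumes `x` and `y` have the same length.

```cuda
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/transform.h>
#include <vector>

// Functor applied to each (x, y) pair on the GPU.
struct Saxpy {
    float a;
    __host__ __device__ float operator()(float x, float y) const {
        return a * x + y;
    }
};

// Hypothetical helper: returns a * x + y, computed on the GPU.
// Assumes x and y have the same number of elements.
std::vector<float> saxpyWithThrust(float a, const std::vector<float>& x,
                                   const std::vector<float>& y) {
    // Constructing a device_vector from host iterators copies the data
    // to GPU memory.
    thrust::device_vector<float> dx(x.begin(), x.end());
    thrust::device_vector<float> dy(y.begin(), y.end());

    // Thrust launches the GPU work; the result overwrites dy.
    thrust::transform(dx.begin(), dx.end(), dy.begin(), dy.begin(), Saxpy{a});

    // Copy the result back to host memory.
    std::vector<float> result(y.size());
    thrust::copy(dy.begin(), dy.end(), result.begin());
    return result;
}
```

Compared with a hand-written kernel, the library handles memory allocation, transfers and launch configuration, at the cost of less control over each step.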

In addition to libraries, compiler directives, CUDA C/C++ and CUDA Fortran, the CUDA platform supports other computational interfaces, including the Khronos Group's OpenCL,[7] Microsoft's DirectCompute, OpenGL Compute Shader and C++ AMP.[8] Third-party wrappers are also available for Python, Perl, Fortran, Java, Ruby, Lua, Common Lisp, Haskell, R, MATLAB, IDL and Julia, and Mathematica has native support.

In the computer game industry, GPUs are used for graphics rendering, and for game physics calculations (physical effects such as debris, smoke, fire, fluids); examples include PhysX and Bullet. CUDA has also been used to accelerate non-graphical applications in computational biology, cryptography and other fields by an order of magnitude or more.[9][10][11][12][13]

CUDA provides both a low level API (CUDA Driver API, non single-source) and a higher level API (CUDA Runtime API, single-source). The initial CUDA SDK was made public on 15 February 2007, for Microsoft Windows and Linux. Mac OS X support was later added in version 2.0,[14] which supersedes the beta released February 14, 2008.[15] CUDA works with all Nvidia GPUs from the G8x series onwards, including GeForce, Quadro and the Tesla line. CUDA is compatible with most standard operating systems.
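Because the driver and the runtime are installed separately, a program may want to check which CUDA versions each one supports. The sketch below is a small illustration using two Runtime API calls, `cudaDriverGetVersion` and `cudaRuntimeGetVersion`; the helper names `getCudaVersions` and `printCudaVersions` are hypothetical. Both calls report the version as a single integer, 1000 × major + 10 × minor.

```cuda
#include <cuda_runtime.h>
#include <cstdio>

// Hypothetical helper: fetch the CUDA version supported by the installed
// driver and the version of the runtime this program was built against.
// Returns false if either query fails.
bool getCudaVersions(int& driverVersion, int& runtimeVersion) {
    return cudaDriverGetVersion(&driverVersion) == cudaSuccess &&
           cudaRuntimeGetVersion(&runtimeVersion) == cudaSuccess;
}

// Hypothetical helper: print both versions in major.minor form.
void printCudaVersions() {
    int driver = 0;
    int runtime = 0;
    if (!getCudaVersions(driver, runtime)) {
        std::printf("Could not query CUDA versions\n");
        return;
    }
    // Versions are encoded as 1000 * major + 10 * minor.
    std::printf("Driver supports CUDA %d.%d, runtime is CUDA %d.%d\n",
                driver / 1000, (driver % 1000) / 10,
                runtime / 1000, (runtime % 1000) / 10);
}
```

A driver version of 0 means no CUDA driver is installed; in general the driver needs to support a CUDA version at least as new as the runtime for kernels to run.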

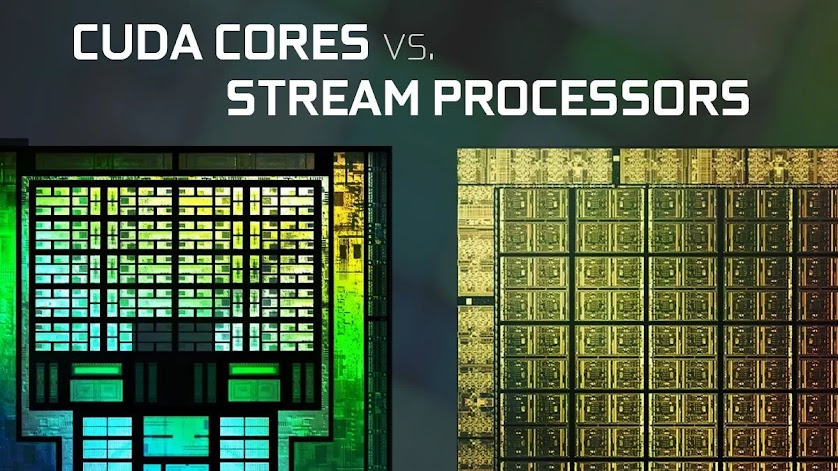

CUDA Cores and Stream Processors

What Nvidia calls “CUDA” encompasses more than just the physical cores on a GPU. CUDA also includes a programming language made specifically for Nvidia graphics cards so that developers can more efficiently maximize usage of Nvidia GPUs. CUDA Cores are responsible for everything you see in-game, from computing lighting and shading to rendering your character’s model.

A GeForce video card, depending on family or generation, can have anywhere from several hundred to several thousand CUDA Cores. The same goes for AMD and its Stream Processors. The two perform the same function and can be used as a metric of performance. Ultimately, both CUDA Cores and Stream Processors operate in a strictly computational capacity, while a CPU core not only calculates but also fetches from memory and decodes instructions.

Both CUDA Cores and Stream Processors are good metrics to look at when comparing cards inside the same family. For instance, the Radeon RX 6800 and RX 6900 XT have 3840 and 5120 Stream Processors respectively. Since they both belong to the same GPU architecture family, the number of Stream Processors ties directly to performance.
