Tiger Lake is Intel's codename for the 11th generation Intel Core mobile processors based on the new Willow Cove Core microarchitecture, manufactured using Intel's third-generation 10 nm process node known as 10SF ("10 nm SuperFin"). Tiger Lake replaces the Ice Lake family of mobile processors, representing an Optimization step in Intel's process–architecture–optimization model.

The Tiger Lake processors launched on September 2, 2020 are part of the Tiger Lake-U family and include dual-core and quad-core models with 9 W (7–15 W) and 15 W (12–28 W) TDPs. They power 2020 "Project Athena" laptops. The quad-core 96 EU die measures 13.6 × 10.7 mm (146.1 mm²), which is 19.2% wider than the 11.4 × 10.7 mm (122.5 mm²) quad-core 64 EU Ice Lake die. The 8-core 32 EU die used in Tiger Lake-H is around 190 mm².[8] According to Yehuda Nissan and his team, the architecture is named after a lake across Puget Sound, Washington.[9] Laptops based on Tiger Lake went on sale in October 2020.
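
The die-size comparison can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the helper name `percent_increase` is hypothetical, and the inputs are the rounded dimensions and areas quoted in the text, so the result may differ from the quoted 19.2% in the last digit (the quoted figure presumably derives from unrounded measurements, which is an assumption).

```python
def percent_increase(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, in percent."""
    return (new / old - 1.0) * 100.0

# Rounded die widths (mm) and areas (mm^2) as quoted in the text.
tgl_width, tgl_area = 13.6, 146.1   # Tiger Lake, quad-core, 96 EU
icl_width, icl_area = 11.4, 122.5   # Ice Lake, quad-core, 64 EU

width_gain = percent_increase(tgl_width, icl_width)
area_gain = percent_increase(tgl_area, icl_area)

print(f"Width increase: {width_gain:.1f}%")
print(f"Area increase:  {area_gain:.1f}%")
```

Because both dies share the same 10.7 mm height, the width gain and the area gain are nearly identical, at roughly 19%.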

The Tiger Lake-H35 processors were launched on January 11, 2021. These quad-core processors are designed for "ultraportable gaming" laptops with a 28–35 W TDP. Intel also announced that the Tiger Lake-H processors with a 45 W TDP and up to eight cores would become available in Q1 2021. Intel officially launched the 11th Gen Intel Core H-series on May 11, 2021[13] and announced the 11th Gen Intel Core Tiger Lake Refresh series on May 30, 2021.

Tiger Lake processor lineup

Features

CPU

Further information: Willow Cove (microarchitecture)

Intel Willow Cove CPU cores

Full memory (RAM) encryption

Indirect branch tracking and CET shadow stack

Intel Key Locker

GPU

Intel Xe-LP ("Gen12") GPU with up to 96 execution units (a 50% uplift over Ice Lake's 64), with some yet-to-be-announced processors using Intel's discrete GPU, DG1

Fixed-function hardware decoding for HEVC 12-bit, 4:2:2/4:4:4; VP9 12-bit 4:4:4 and AV1 8K 10-bit 4:2:0

Support for a single 8K 12-bit HDR display or two 4K 10-bit HDR displays

Hardware accelerated Dolby Vision

Sampler Feedback support

Dual Queue Support

IPU

Image Processing Unit, a special co-processor to improve image and video capture quality

Not available on embedded models

Initially, the 1165G7, 1135G7, 1125G4 and 1115G4 were offered as models with no IPU, but embedded processors were later introduced instead

I/O

PCI Express 4.0 (Pentium and Celeron CPUs are limited to PCI Express 3.0)

Integrated Thunderbolt 4 (includes USB4)

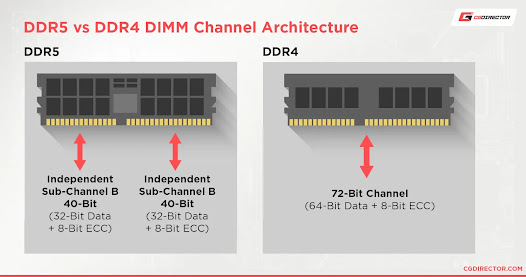

LPDDR4X-4267 memory support

LPDDR5-5400 "architecture capability" (Intel expected Tiger Lake products with LPDDR5 to be available around Q1 2021 but never released them)

Miniaturization of CPU and motherboard into an M.2 SSD-sized small circuit board

Intel Iris Xe Graphics G7 96EUs

The Intel Xe Graphics G7 (the Tiger Lake-U GPU with 96 EUs) is an integrated graphics processor in the high-end Tiger Lake-U CPUs (15–28 W). It uses the new Xe architecture (Gen12) and was introduced in September 2020. The GPU has a guaranteed base clock of 400 MHz in all CPUs and can boost up to 1340 MHz (i7-1185G7). The slowest variant boosts to only 1100 MHz (i5-1130G7, 12 W TDP).
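
To put these clock speeds into perspective, theoretical peak FP32 throughput can be estimated from the EU count and the clock. This is a rough sketch rather than an Intel-published formula: it assumes that each Xe-LP EU has 8 FP32 lanes and that a fused multiply-add counts as two operations. Both constants and the function name `peak_fp32_gflops` are assumptions made for illustration.

```python
FP32_LANES_PER_EU = 8  # assumption: 8 FP32 lanes per Xe-LP execution unit
FLOPS_PER_FMA = 2      # assumption: one fused multiply-add = 2 operations

def peak_fp32_gflops(eus: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in GFLOPS for a given EU count and clock."""
    return eus * FP32_LANES_PER_EU * FLOPS_PER_FMA * clock_mhz / 1000.0

# Clock speeds (MHz) taken from the text; 96 EUs in every case.
clocks = [
    ("base clock", 400),
    ("i5-1130G7 boost", 1100),
    ("i7-1185G7 boost", 1340),
]

for label, mhz in clocks:
    print(f"{label:>16}: {peak_fp32_gflops(96, mhz):7.1f} GFLOPS")
```

These numbers are theoretical ceilings only; sustained clocks, and therefore real-world performance, depend on the TDP configuration and cooling of the laptop.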

Performance depends on the laptop's TDP settings and cooling. Early information indicates that the chip can be configured with a default TDP of 12 or 28 W (like the Ice Lake-U chips) and that performance should be roughly on par with a dedicated GeForce MX350 in 3DMark benchmarks. For gaming, we expect somewhat lower performance because of the lack of dedicated graphics memory and driver support. Many games had problems when we tested the various laptops (e.g. Horizon Zero Dawn and Cyberpunk 2077 did not start or crashed; see list below). Less demanding games such as Mass Effect Legendary Edition ran fine at medium settings. Compared to the older Ice Lake Iris Plus G7 GPU, the new Tiger Lake GPU should be approximately twice as fast. Even so, in demanding games the iGPU is still suited only to the lowest graphics settings and low resolutions.

The Tiger Lake SoCs, and therefore the integrated GPU, are manufactured by Intel on its improved 10 nm process (10nm+, also called 10 nm SuperFin) and should therefore offer very good efficiency.
