A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently. It typically uses flash memory and functions as secondary storage in the hierarchy of computer storage. It is also sometimes called a semiconductor storage device, a solid-state device or a solid-state disk,[1] even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks.

Compared with electromechanical drives, SSDs are typically more resistant to physical shock, run silently, and have higher input/output rates and lower latency. SSDs store data in semiconductor cells. As of 2019, cells can contain between 1 and 4 bits of data. The properties of an SSD vary with the number of bits stored in each cell. Single-bit cells ("Single-Level Cells" or "SLC") are generally the most reliable, durable, fast, and expensive type, followed by 2- and 3-bit cells ("Multi-Level Cells/MLC" and "Triple-Level Cells/TLC"). Quad-bit cells ("Quad-Level Cells/QLC") are the cheapest per gigabyte of the four and are used in consumer devices that do not require such extreme properties. In addition, 3D XPoint memory (sold by Intel under the Optane brand) stores data by changing the electrical resistance of cells instead of storing electrical charges in them. SSDs made from RAM can be used where high speed is needed and data persistence after power loss is not required, or they may use battery power to retain data when their usual power source is unavailable.[4] Hybrid drives or solid-state hybrid drives (SSHDs), such as Apple's Fusion Drive, combine features of SSDs and HDDs in the same unit, using both flash memory and spinning magnetic disks to improve the performance of frequently accessed data. Bcache achieves a similar effect purely in software, using combinations of dedicated regular SSDs and HDDs.
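The bits-per-cell trade-off described above can be made concrete with a little arithmetic: a cell that stores n bits has to distinguish 2^n distinct states. The short Python sketch below tabulates this for the four cell types. The function and dictionary names are illustrative only, and the "states" framing is a simplification, not a description of any particular vendor's design.

```python
# Bits stored per cell for each cell type named in the text.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def states_per_cell(bits_per_cell: int) -> int:
    """Return how many distinct states a cell must distinguish."""
    if bits_per_cell < 1:
        raise ValueError("a cell stores at least one bit")
    return 2 ** bits_per_cell


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {bits} bit(s) per cell, {states_per_cell(bits)} states")
```

Each extra bit doubles the number of states that must be told apart within the same cell, which is consistent with denser cell types being cheaper per gigabyte but less robust.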

Highlighted:

Flash memory

Most SSD manufacturers use non-volatile NAND flash memory in the construction of their SSDs because of the lower cost compared with DRAM and the ability to retain the data without a constant power supply, ensuring data persistence through sudden power outages. Flash memory SSDs were initially slower than DRAM solutions, and some early designs were even slower than HDDs after continued use. This problem was resolved by controllers that came out in 2009 and later.

Flash-based SSDs store data in metal-oxide-semiconductor (MOS) integrated circuit chips which contain non-volatile floating-gate memory cells.[89] Flash memory-based solutions are typically packaged in standard disk drive form factors (1.8-, 2.5-, and 3.5-inch), but also in smaller, more compact form factors, such as M.2, made possible by the small size of flash memory.

Lower-priced drives usually use quad-level cell (QLC), triple-level cell (TLC) or multi-level cell (MLC) flash memory, which is slower and less reliable than single-level cell (SLC) flash memory.[90][91] These drawbacks can be mitigated or even reversed by the internal design of the SSD, such as interleaving, changes to writing algorithms, and higher over-provisioning (more excess capacity) with which the wear-leveling algorithms can work.
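As a rough illustration of how over-provisioning and wear leveling interact, the Python sketch below computes an over-provisioning percentage and spreads erases across blocks by always picking the least-worn one. The formula is one commonly used definition (spare capacity relative to user capacity), and the `ToyWearLeveler` class is a hypothetical, highly simplified model; real controllers use far more elaborate policies.

```python
def over_provisioning_pct(physical_gb: float, user_gb: float) -> float:
    """Spare capacity as a percentage of user-visible capacity (assumed definition)."""
    return (physical_gb - user_gb) / user_gb * 100


class ToyWearLeveler:
    """Hypothetical model: always erase and reuse the least-worn block."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks

    def pick_block(self) -> int:
        # Choose the block with the fewest erases; ties go to the lowest index.
        block = min(range(len(self.erase_counts)),
                    key=self.erase_counts.__getitem__)
        self.erase_counts[block] += 1
        return block


if __name__ == "__main__":
    print(f"{over_provisioning_pct(128, 120):.1f}% over-provisioning")
    leveler = ToyWearLeveler(num_blocks=4)
    print([leveler.pick_block() for _ in range(8)])
    print(leveler.erase_counts)
```

With more spare blocks available, a policy like this has more candidates to rotate through, so each individual block is erased less often for the same write workload.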

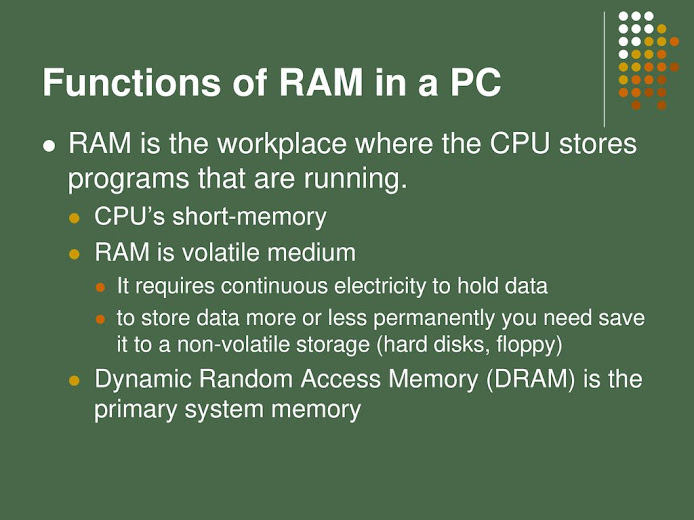

DRAM

SSDs based on volatile memory such as DRAM are characterized by very fast data access, generally less than 10 microseconds, and are used primarily to accelerate applications that would otherwise be held back by the latency of flash SSDs or traditional HDDs.

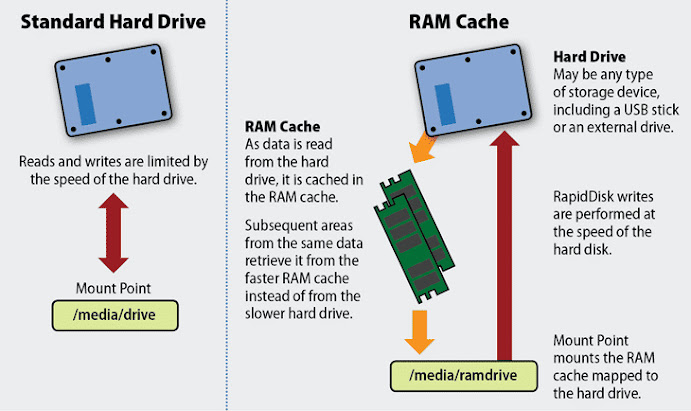

DRAM-based SSDs usually incorporate either an internal battery or an external AC/DC adapter and backup storage systems to ensure data persistence while no power is being supplied to the drive from external sources. If power is lost, the battery provides power while all information is copied from random access memory (RAM) to back-up storage. When the power is restored, the information is copied back to the RAM from the back-up storage, and the SSD resumes normal operation (similar to the hibernate function used in modern operating systems).[96][97]
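The save-and-restore cycle described above can be sketched as a small state machine. The Python class below is a hypothetical toy model, not the behavior of any specific product: `ram` stands in for the volatile DRAM, `backup` for the back-up storage, and the battery is implied by the fact that `power_lost` is still able to copy the data.

```python
class ToyDramSsd:
    """Hypothetical model of a DRAM SSD with battery-backed back-up storage."""

    def __init__(self) -> None:
        self.ram = {}       # volatile contents, keyed by logical block address
        self.backup = {}    # non-volatile back-up storage
        self.powered = True

    def write(self, lba: int, data: bytes) -> None:
        if not self.powered:
            raise RuntimeError("drive has no external power")
        self.ram[lba] = data

    def read(self, lba: int) -> bytes:
        if not self.powered:
            raise RuntimeError("drive has no external power")
        return self.ram[lba]

    def power_lost(self) -> None:
        # The battery keeps RAM alive just long enough to copy everything out.
        self.backup = dict(self.ram)
        self.ram.clear()
        self.powered = False

    def power_restored(self) -> None:
        # Copy the saved image back into RAM and resume normal operation.
        self.ram = dict(self.backup)
        self.powered = True


if __name__ == "__main__":
    drive = ToyDramSsd()
    drive.write(0, b"hello")
    drive.power_lost()
    drive.power_restored()
    print(drive.read(0))
```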

SSDs of this type are usually fitted with DRAM modules of the same type used in regular PCs and servers, which can be swapped out and replaced by larger modules; examples include the i-RAM, HyperOs HyperDrive, and DDRdrive X1. Some manufacturers of DRAM SSDs solder the DRAM chips directly to the drive and do not intend the chips to be swapped out; examples include the ZeusRAM and Aeon Drive.

A remote, indirect memory-access disk (RIndMA Disk) uses a secondary computer with a fast network or (direct) InfiniBand connection to act like a RAM-based SSD, but the newer, faster flash-based SSDs that were already available in 2009 have made this option less cost-effective.

While the price of DRAM continues to fall, the price of flash memory falls even faster. The "flash becomes cheaper than DRAM" crossover point occurred around 2004.

3D XPoint

In 2015, Intel and Micron announced 3D XPoint as a new non-volatile memory technology. Intel released the first 3D XPoint-based drive (branded as Intel Optane SSD) in March 2017, starting with a data center product, the Intel Optane SSD DC P4800X Series, and following with the client version, the Intel Optane SSD 900P Series, in October 2017. Both products operate faster and with higher endurance than NAND-based SSDs, while the areal density is comparable at 128 gigabits per chip. In price per bit, 3D XPoint is more expensive than NAND but cheaper than DRAM.

Cache or buffer

A flash-based SSD typically uses a small amount of DRAM as a volatile cache, similar to the buffers in hard disk drives. A directory of block placement and wear-leveling data is also kept in the cache while the drive is operating. One SSD controller manufacturer, SandForce, does not use an external DRAM cache in its designs but still achieves high performance. Eliminating the external DRAM reduces power consumption and enables further size reduction of SSDs.
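The "directory of block placement" mentioned above can be pictured as a lookup table from logical pages (what the host addresses) to physical pages (where the data currently sits). The Python sketch below is a hypothetical, minimal model: it assumes that every rewrite goes to a fresh physical page and marks the old one stale, which is a common flash design but is not stated in the text above. All names are illustrative.

```python
class ToyBlockDirectory:
    """Hypothetical in-cache map from logical pages to physical pages."""

    def __init__(self, num_physical_pages: int) -> None:
        self.l2p = {}                                  # logical -> physical
        self.free = list(range(num_physical_pages))    # unused physical pages
        self.stale = set()                             # pages holding old data

    def write(self, logical_page: int) -> int:
        if not self.free:
            raise RuntimeError("no free pages; a real drive would reclaim stale ones")
        new_physical = self.free.pop(0)
        old_physical = self.l2p.get(logical_page)
        if old_physical is not None:
            # Assumed out-of-place update: the old copy is only marked stale.
            self.stale.add(old_physical)
        self.l2p[logical_page] = new_physical
        return new_physical

    def lookup(self, logical_page: int) -> int:
        return self.l2p[logical_page]


if __name__ == "__main__":
    directory = ToyBlockDirectory(num_physical_pages=4)
    directory.write(7)
    directory.write(7)           # rewriting the same logical page
    print(directory.lookup(7), directory.stale)
```

Because every read and write consults this table, keeping it in fast DRAM (or finding a way to do without it) has a direct effect on drive performance.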

NVMe SSD

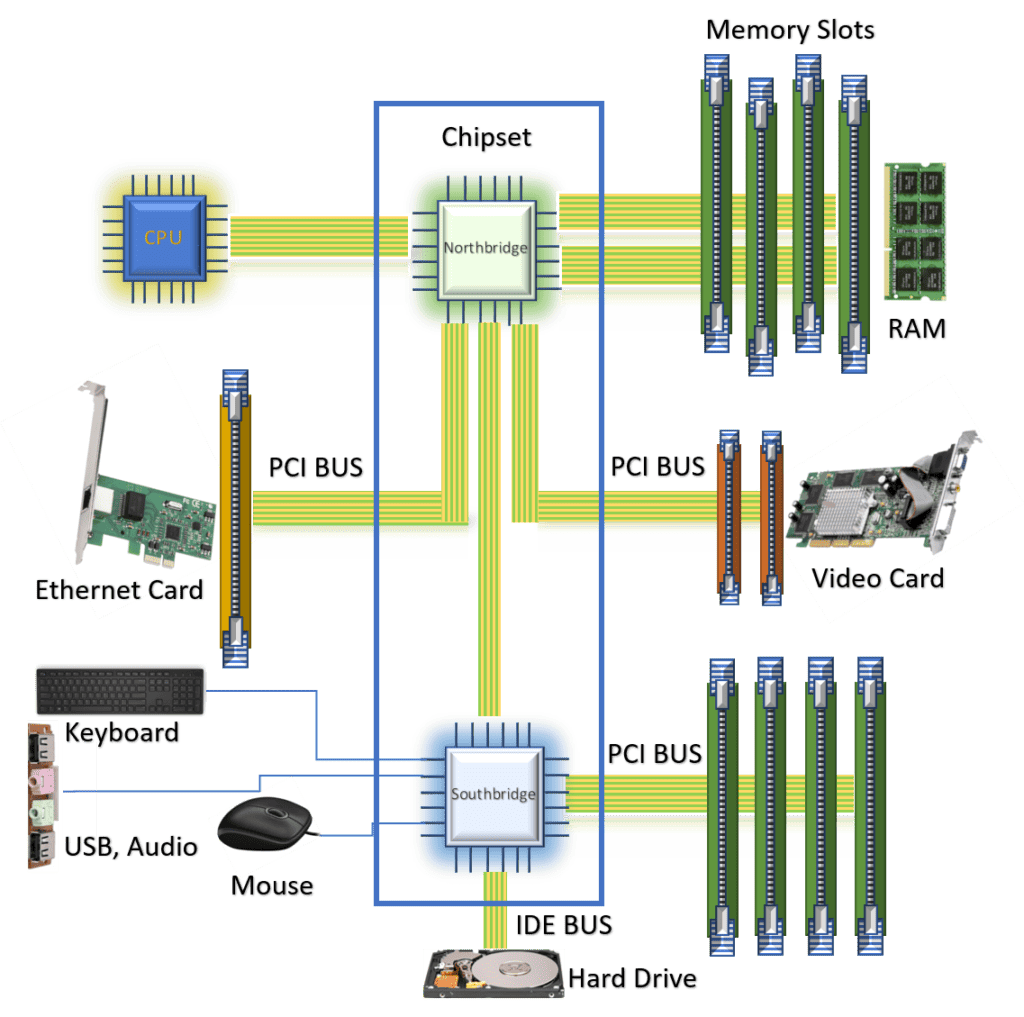

NVM Express (NVMe) or Non-Volatile Memory Host Controller Interface Specification (NVMHCIS) is an open, logical-device interface specification for accessing a computer's non-volatile storage media, usually attached via the PCI Express (PCIe) bus. The initialism NVM stands for non-volatile memory, which is often NAND flash memory that comes in several physical form factors, including solid-state drives (SSDs), PCIe add-in cards, and M.2 cards, the successor to mSATA cards. NVM Express, as a logical-device interface, has been designed to capitalize on the low latency and internal parallelism of solid-state storage devices.

Architecturally, the logic for NVMe is physically stored within and executed by the NVMe controller chip that is physically co-located with the storage media, usually an SSD. Version changes for NVMe, e.g., 1.3 to 1.4, are incorporated within the storage media, and do not affect PCIe-compatible components such as motherboards and CPUs.

By its design, NVM Express allows host hardware and software to fully exploit the levels of parallelism possible in modern SSDs. As a result, NVM Express reduces I/O overhead and brings various performance improvements relative to previous logical-device interfaces, including multiple long command queues, and reduced latency. The previous interface protocols like AHCI were developed for use with far slower hard disk drives (HDD) where a very lengthy delay (relative to CPU operations) exists between a request and data transfer, where data speeds are much slower than RAM speeds, and where disk rotation and seek time give rise to further optimization requirements. NVM Express SSDs run hotter than 2.5" SATA SSDs and can quickly and easily reach temperatures in excess of 80 °C.
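To give a sense of scale for the "multiple long command queues" mentioned above, the Python sketch below compares the commonly cited queue limits of AHCI (one queue of up to 32 commands) and NVMe (up to 65,535 I/O queues of up to 65,536 commands each). These figures do not appear in the text above and are included as an assumption drawn from the respective specifications; real devices typically expose far fewer queues. The class and variable names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Upper bounds on command queuing for a logical-device interface."""
    name: str
    max_queues: int
    max_depth: int

    @property
    def max_outstanding(self) -> int:
        # Theoretical ceiling on commands in flight at once.
        return self.max_queues * self.max_depth


# Assumed specification limits, not taken from the article text.
AHCI = QueueModel("AHCI", max_queues=1, max_depth=32)
NVME = QueueModel("NVMe", max_queues=65_535, max_depth=65_536)

if __name__ == "__main__":
    for model in (AHCI, NVME):
        print(f"{model.name}: {model.max_queues} queue(s) x "
              f"{model.max_depth} commands = {model.max_outstanding}")
```

Having many independent queues lets each CPU core submit commands without contending for a single shared queue, which is one way the interface exposes the parallelism inside the drive.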

NVM Express devices are chiefly available in the form of standard-sized PCI Express expansion cards and as 2.5-inch form-factor devices that provide a four-lane PCI Express interface through the U.2 connector (formerly known as SFF-8639). Storage devices using SATA Express and the M.2 specification which support NVM Express as the logical-device interface are a popular use-case for NVMe and have become the dominant form of solid-state storage for servers, desktops, and laptops alike.
